Discover the power of web scraping and how it can be an invaluable tool in today's data-driven world. This article breaks down how webpages are constructed using unstructured data in HTML and demonstrates how to use Beautiful Soup and Requests to scrape a website.

Key Insights

- The tutorial emphasizes that any data visible on a website can be scraped, mined, and converted into a dataframe.

- The structure of a webpage comprises several nodes such as the document node, HTML elements, HTML attributes, and text nodes. Text nodes are usually the primary targets in web scraping projects.

- HTML elements are defined by opening and closing tags, and often possess attributes that dictate certain properties and behaviors of the webpage.

- The tutorial advises the use of IDs to create unique reference points and differentiate similar HTML elements within a page.

- The demonstration showcases how Beautiful Soup and Requests can be used to scrape data from a website and transfer it into a Pandas dataframe.

- Part II of the web scraping series promises to delve into a web scraping technique called XPath and a web scraping workflow using a framework known as Scrapy.

Get started with web scraping in this Python web scraping tutorial. Learn the fundamentals of HTML that you'll need to understand a site structure and effectively scrape data from the site.

One of the largest and most ubiquitous sources of data is all around us. It is the web. If there’s anything I want you to remember after reading this tutorial, it is this: “If you can see it on a website, it can be scraped, mined, and put into a dataframe.” However, before we begin talking about web scraping, it is important to cover how webpages are constructed as unstructured data in HyperText Markup Language (HTML). In this article, we will familiarize ourselves in reading and navigating HTML. Afterwards, we will use Beautiful Soup and Requests to scrape a website called Datatau. In part II of the Web Scraping series, we will cover a technique called XPath and look at a web scraping workflow using a framework called Scrapy.

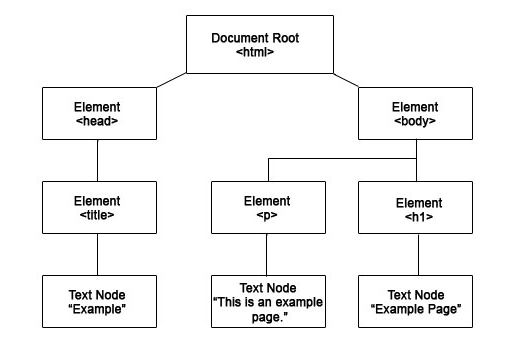

In HTML document object model (DOM), everything is considered a node:

- The document is a document node.

- HTML elements are element nodes.

- All HTML attributes are attribute nodes.

- Text inside HTML elements are text nodes.

- This is what people usually target in web scraping projects. The actual text nugget after digging through all the hierarchy of nodes.

HTML Hierarchy

If you visualize the node above, it looks like this. The document node is the root and there will be multiple elements that each contain smaller elements like title, paragraph, and h1. Each of these smaller elements will contain text nodes that people usually mine. Let’s transform the nodes into actual code so we can familiarize ourselves with HTML.

Elements

Elements have opening and closing tags which are defined by namespaced and encapsulated strings. The namespaced tags must be the same. Here are some examples!

<title>I am a title.</title>

<p>I am a paragraph.</p>

<strong>I am bold.</strong>

It is common for websites to have several tags of the same element on a single page. Front-end developers assign ID values to namespaces to differentiate them and make unique reference points. Here is an example of assigning ID values and I’ve color coded them to make it easier for you to distinguish the different parts of the tag:

<titleid = 'title_1'>I am the first title.</title>

<pid = 'paragraph_1'>I am the first paragraph.</p>

<title id = 'title_2'>I am the second title.</title>

<pid = 'paragraph_2'>I am the second paragraph.</p>

Parents and Children

Elements can have parents and children. They can also be both parents and children too depending on how you reference it. I’ll show you an example:

<bodyid = 'parent'>

<divid = 'child_1'>I am the child of 'parent.' # also parent of child_2

<divid = 'child_2'>I am the child of 'child_1.' # also parent of child_3

<divid = 'child_3'>I am the child of 'child_2.' # also parent of child_4

<divid = 'child_4'>I am the child of 'child_3.'</div> # not a parent

</div>

</div>

</div>

</body>

Depending on how you reference each tag, they can be a parent or a child. Notice how the gray comments that note how tags can also be a parent start with a hashtag. Comments start with hashtags and it is common to read code or HTML docs with comments in them. Commenting your code makes it easier for other developers to follow and read your code.

Attributes

This is the last tidbit of vocabulary you’ll have to know when you’re dealing with HTML and web scraping. Attributes describe the properties and characteristics of elements. They affect how the page behaves or looks when it is rendered by the browser. The most common element is an anchor element and anchor elements often have a “href” element that tells the browser where to go when a user clicks it. To put it in English, it’s a link. Here’s an example anchor element:

<ahref = "https://www.youtube.com/watch?v=dQw4w9WgXcQ">Click Me!</a>

This will look like this on your browser: Click me!

Now that we’re all on the same page (get it?) Let’s get scraping!

Installing Requests and Beautiful Soup to Extract Data

First thing you have to do is install the required packages. Go to your terminal or command prompt and type:

- Pip install beautifulsoup4

- Pip install lxml

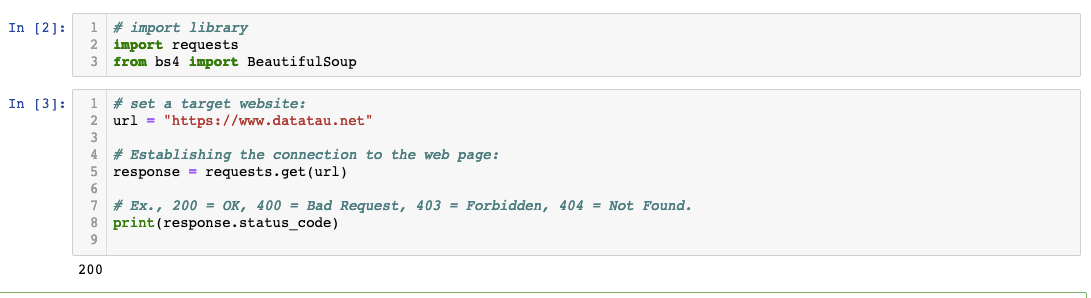

Once you have successfully installed it, go to your IDE (I’ll be using Jupyter Notebook) to import the libraries and set a target website. Let’s use www.datatau.net and see if we can scrape article titles.

Data Import and URL Request

It’s important to check response.status_code. Some websites will block your requests. Make sure you get a “200”.

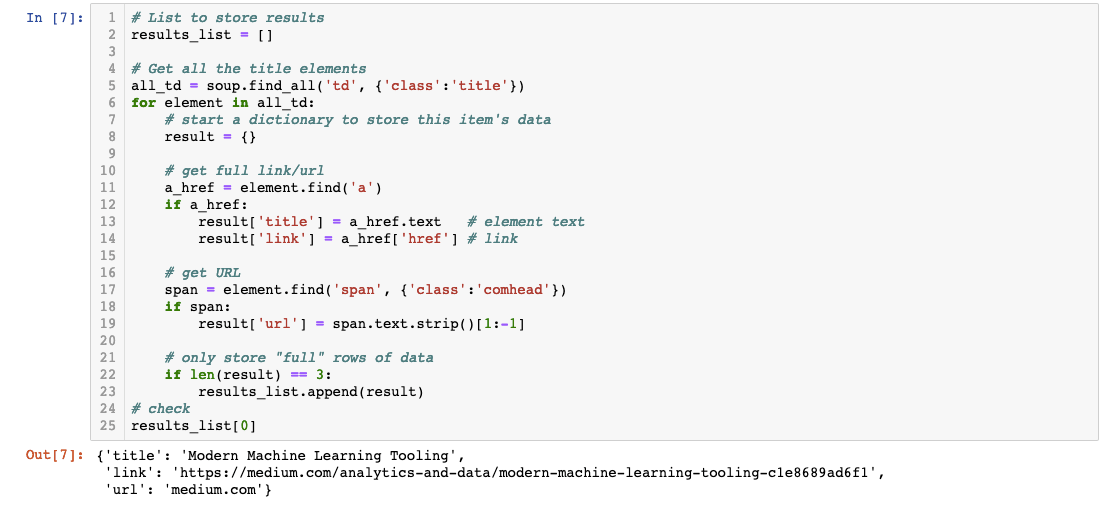

Scraping Code

Looks good! We just scraped the title, link, and origin URL. Let’s put all of this in a Pandas dataframe next.

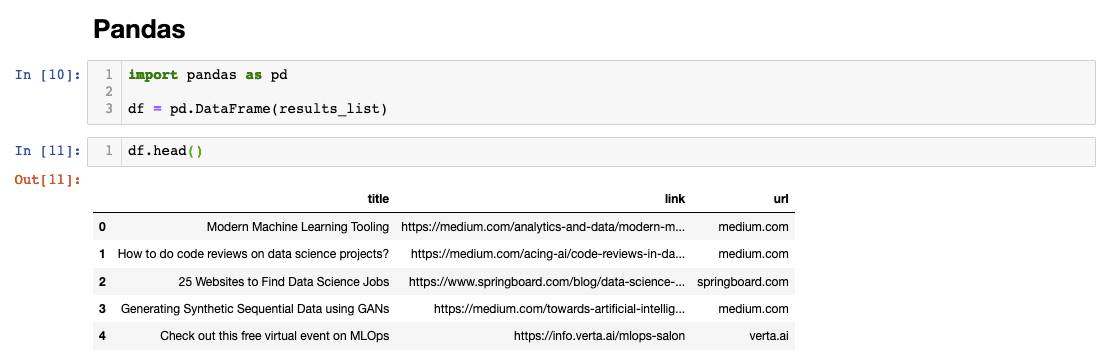

List to Pandas Dataframe

Once we have a list of dictionaries, we can simply import Pandas and use the DataFrame method to convert it into a dataframe.

Great job if you were able to follow along and scrape the website! I think the scraping code went from 0-60 real quick so don’t beat yourself up if you get stuck. There’s a lot of great resources out there and our Python course can definitely get you up to speed. Stick around for Part II of the web scraping series where we’ll learn about Xpath.